Who would have thought that an artificial intelligence trained on human intelligence would be just as dumb

Hm. This is what I got.

I think about 90% of the screenshots we see of LLMs failing hilariously are doctored. Lemmy users really want to believe it's that bad, though.

Edit:

I've had lots of great experiences with ChatGPT, and I've also had it hallucinate things.

I saw someone post an image of a simplified riddle, where ChatGPT tried to solve it as if it were the entire riddle, but it added extra restrictions and gave a confusing response. I tried it for myself and got an even better answer.

Prompt (no prior context except saying I have a riddle for it):

A man and a goat are on one side of the river. They have a boat. How can they go across?

Response:

The man takes the goat across the river first, then he returns alone and takes the boat across again. Finally, he brings the goat's friend, Mr. Cabbage, across the river.

I wish I was witty enough to make this up.

Yesterday, someone posted a doctored one on here saying everyone eats it up even if you use a ridiculous font in your poorly doctored photo. People who want to believe are quite easy to fool.

Holy fuck did it just pass the Turing test?

“Major new Technology still in Infancy Needs Improvements”

-- headline every fucking day

"Corporation using immature technology in productions because it's cool"

More news at eleven

This is scary because up to now, all software released worked exactly as intended so we need to be extra special careful here.

unready technology that spews dangerous misinformation in the most convincing way possible is being massively promoted

in Infancy Needs Improvements

I'm just gonna go out on a limb and say that if we have to invest in new energy sources just to make these tools functionally usable... maybe we're better off just paying people to do these jobs instead of burning the planet to a rocky dead husk to achieve AI?

The way I see it, we’re finally sliding down the trough of disillusionment.

You have no idea how many times I mentioned this observation from my own experience and people attacked me like I called their baby ugly

ChatGPT in its current form is a good help, but nowhere near ready to actually replace anyone

A lot of firms are trying to outsource their dev work overseas to communities of non-English speakers, and then handing the result off to a tiny support team.

ChatGPT lets the cheap low skill workers churn out miles of spaghetti code in short order, creating the illusion of efficiency for people who don't know (or care) what they're buying.

Yeap.... Another brilliant short term strategy to catch a few eager fools that won't last mid term

GPT-2 came out a little more than 5 years ago, it answered 0% of questions accurately and couldn't string a sentence together.

GPT-3 came out a little less than 4 years ago and was kind of a neat party trick, but I'm pretty sure answered ~0% of programming questions correctly.

GPT-4 came out a little less than 2 years ago and can answer 48% of programming questions accurately.

I'm not talking about morality, or creativity, or good/bad for humanity, but if you don't see a trajectory here, I don't know what to tell you.

Seeing the trajectory is not the ultimate answer to anything.

Perhaps there is some line between assuming infinite growth and declaring that this technology that is not quite good enough right now will therefore never be good enough?

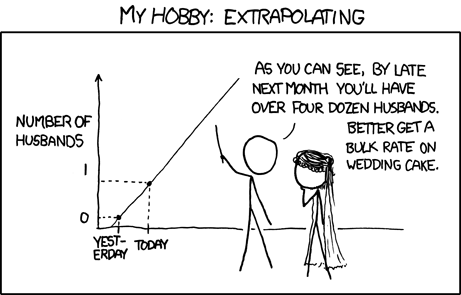

Blindly assuming no further technological advancements seems as foolish to me as assuming perpetual exponential growth. Ironically, our ability to extrapolate from limited information is a huge part of human intelligence that AI hasn't solved yet.

I appreciate the XKCD comic, but I think you're exaggerating that other commenter's intent.

The tech has been improving, and there's no obvious reason to assume that we've reached the peak already. Nor is the other commenter saying we went from 0 to 1 and so now we're going to see something 400x as good.

Speaking at a Bloomberg event on the sidelines of the World Economic Forum's annual meeting in Davos, Altman said the silver lining is that more climate-friendly sources of energy, particularly nuclear fusion or cheaper solar power and storage, are the way forward for AI.

"There's no way to get there without a breakthrough," he said. "It motivates us to go invest more in fusion."

It's a good trajectory, but when you have people running these companies saying that we need "energy breakthroughs" to power something that gives more accurate answers in the face of a world that's already experiencing serious issues arising from climate change...

It just seems foolhardy if we have to burn the planet down to get to 80% accuracy.

I'm glad Altman is at least promoting nuclear, but at the same time, he has his fingers deep in a nuclear energy company, so it's not like this isn't something he might be pushing because it benefits him directly. He's not promoting nuclear because he cares about humanity, he's promoting nuclear because he has deep investments in nuclear energy. That seems like just one more capitalist trying to corner the market for themselves.

We are running these things on computers not designed for this. Right now, there are ASICs being built that are specifically designed for it, and traditionally, ASICs give about 5 orders of magnitude of efficiency gains.

So the issue for me is this:

If these technologies still require large amounts of human intervention to make them usable then why are we expending so much energy on solutions that still require human intervention to make them usable?

Why not skip burning the planet to a crisp for half-formed technology that can't give consistent results and instead just pay people a living fucking wage to do the job in the first place?

Seriously, one of the biggest jokes in computer science is that debugging other people's code gives you worse headaches than migraines.

So now we're supposed to dump insane amounts of money and energy (as in burning fossil fuels and needing so much energy they're pushing for a nuclear resurgence) into a tool that results in... having to debug other people's code?

They've literally turned all of programming into the worst aspect of programming for barely any fucking improvement over just letting humans do it.

Why do we think it's important to burn the planet to a crisp in pursuit of this when humans can already fucking make art and code? Especially when we still need humans to fix the fucking AI's work to make it functionally usable. That's still a lot of fucking work expected of humans for a "tool" that's demanding more energy sources than currently exist.

They don't require as much human intervention to make the results usable as would be required if the tool didn't exist at all.

Compilers produce machine code, but require human intervention to write the programs that they compile to machine code. Are compilers useless wastes of energy?

I honestly don't know how well AI is going to scale when it comes to power consumption vs performance. If it's like most of the progress we've seen in hardware and software over the years, it could be very promising. On the other hand, past performance is no guarantee for future performance. And your concerns are quite valid. It uses an absurd amount of resources.

The usual AI squad may jump in here with their usual unbridled enthusiasm and copium that other jobs are under threat, but my job is safe, because I'm special.

Eye roll.

Meanwhile, thousands have been laid off already, and executives and shareholders are drooling at the possibility of thinning the workforce even more. Those who think AI will create as many jobs as it destroys are thinking wishfully. Assuming it scales well, it could spell massive layoffs. Some experts predict tens of millions of jobs lost to AI by 2030.

To try and answer the other part of your question...at my job (which is very technical and related to healthcare) we have found AI to be extremely useful. Using Google to search for answers to problems pales in comparison. AI has saved us a lot of time and effort. I can easily imagine us cutting staff eventually, and we're a small shop.

The future will be a fascinating mix of good and bad when it comes to AI. Some things are quite predictable. Like the loss of creative jobs in art, music, animation, etc. And canned response type jobs like help desk chat, etc. The future of other things like software development, healthcare, accounting, and so on are a lot murkier. But no job (that isn't very hands-on-physical) is 100% safe. Especially in sectors with high demand and low supply of workers. Some of these models understand incredibly complex things like drug interactions. It's going to be a wild ride.

Yeah it's wrong a lot but as a developer, damn it's useful. I use Gemini for asking questions and Copilot in my IDE personally, and it's really good at doing mundane text editing bullshit quickly and writing boilerplate, which is a massive time saver. Gemini has at least pointed me in the right direction with quite obscure issues or helped pinpoint the cause of hidden bugs many times. I treat it like an intelligent rubber duck rather than expecting it to just solve everything for me outright.

I tend to agree, but I've found that most LLMs are worse than I am with regex, and that's quite the achievement considering how bad I am with them.

I will resort to ChatGPT for coding help every so often. I'm a fairly experienced programmer, so my questions usually tend to be somewhat complex. I've found that it's extremely useful for those problems that fall into the category of "I could solve this myself in 2 hours, or I could ask AI to solve it for me in seconds." Usually, I'll get a working solution, but almost every single time, it's not a good solution. It provides a great starting-off point to write my own code.

Some of the issues I've found (speaking as a C++ developer) are: Variables not declared "const," extremely inefficient use of data structures, ignoring modern language features, ignoring parallelism, using an improper data type, etc.

ChatGPT is great for generating ideas, but it's going to be a while before it can actually replace a human developer. Producing code that works isn't hard; producing code that's good requires experience.

My experience with an AI coding tool today.

Me: Can you optimize this method.

AI: Okay, here's an optimized method.

Me seeing the AI completely removed a critical conditional check.

Me: Hey, you completely removed this check with variable xyz

AI: Oops, you're right, here you go, I fixed it.

It did this 3 times on 3 different optimization requests.

It was 0 for 3

Although there were some good suggestions once you got past the blatant first error.

Don't mean to victim blame, but I don't understand why you would use ChatGPT for hard problems like optimization. And I say this as a heavy ChatGPT/Copilot user.

From what I've observed, LLMs approach code through its linguistic and syntactic aspects, not through its technical effects.

ChatGPT and GitHub Copilot are great tools, but they're like a chainsaw: if you apply them incorrectly or become too casual and careless with them, they will kick back at you and fuck your day up.

If you don't know what you are doing, and you give it a vague request hoping it will automatically solve your problem, then you will just have to spend even more time to debug its given code.

However, if you know exactly what needs to be done, and give it a good prompt, then it will reward you with very well-written code, a clean implementation and comments. Consider it an intern or junior developer.

Example of bad prompt: My code won't work [paste the code], I keep having this error [paste the error log], please help me

Example of (reasonably) good prompt: This code introduces deep recursion and can sometimes cause a "maximum stack size exceeded" error in certain cases. Please help me convert it to use a while loop instead.
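To make that second prompt concrete, here is a minimal sketch of the kind of rewrite it asks for. Everything in it is hypothetical (the `Nested` type, the function names, the summing task); it is not the code from any real prompt. It only shows the general technique of replacing call-stack recursion with a `while` loop over an explicit stack.

```typescript
// Hypothetical example: summing a deeply nested list of numbers.
type Nested = number | Nested[];

// Recursive version: one call frame per nesting level, so very deep input
// can run into the engine's call stack limit.
function sumRecursive(value: Nested): number {
  if (typeof value === "number") return value;
  let total = 0;
  for (const child of value) total += sumRecursive(child);
  return total;
}

// Iterative version: an explicit stack (a heap-allocated array) replaces
// the call stack, so nesting depth no longer costs call frames.
function sumIterative(value: Nested): number {
  const stack: Nested[] = [value];
  let total = 0;
  while (stack.length > 0) {
    const current = stack.pop()!;
    if (typeof current === "number") {
      total += current;
    } else {
      for (const child of current) stack.push(child);
    }
  }
  return total;
}

// Helper for trying it out: wraps the number 1 in `depth` levels of arrays.
function buildDeep(depth: number): Nested {
  let node: Nested = 1;
  for (let i = 0; i < depth; i++) node = [node];
  return node;
}
```

Both functions return the same result on shallow input. On something like `buildDeep(100000)` the recursive one may fail with a stack size error, depending on the engine, while the loop version only grows an array.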

I wouldn't trust an LLM to produce any kind of programming answer. If you're skilled enough to know it's wrong, then you should do it yourself, if you're not, then you shouldn't be using it.

I've seen plenty of examples of specific, clear, simple prompts that an LLM absolutely butchered by using libraries, functions, classes, and APIs that don't exist. Likewise with code analysis where it invented bugs that literally did not exist in the actual code.

LLMs don't have a holistic understanding of anything—they're your non-programming, but over-confident, friend that's trying to convey the results of a Google search on low-level memory management in C++.

If you're skilled enough to know it's wrong, then you should do it yourself, if you're not, then you shouldn't be using it.

Oh I strongly disagree. I’ve been building software for 30 years. I use copilot in vscode and it writes so much of the tedious code and comments for me. Really saves me a lot of time, allowing me to spend more time on the complicated bits.

I'm closing in on 30 years too, started just around '95, and I have yet to see an LLM spit out anything useful that I would actually feel comfortable committing to a project. Usually you end up having to spend as much time—if not more—double-checking and correcting the LLM's output as you would writing the code yourself. (Full disclosure: I haven't tried Copilot, so it's possible that it's different from Bard/Gemini, ChatGPT and what-have-you, but I'd be surprised if it was that different.)

Here's a good example of how an LLM doesn't really understand code in context and thus finds a "bug" that's literally mitigated in the line before the one where it spots the potential bug: https://daniel.haxx.se/blog/2024/01/02/the-i-in-llm-stands-for-intelligence/ (see "Exhibit B", which links to: https://hackerone.com/reports/2298307, which is the actual HackerOne report).

LLMs don't understand code. It's literally your "helpful", non-programmer friend, on steroids, cobbling together bits and pieces from searches on SO, Reddit, DevShed, etc. and hoping the answer will make you impressed with him. Reading the study from TFA (https://dl.acm.org/doi/pdf/10.1145/3613904.3642596, §§5.1-5.2 in particular) only cements this position further for me.

And that's not even touching upon the other issues (like copyright, licensing, etc.) with LLM-generated code that led to NetBSD simply forbidding it in their commit guidelines: https://mastodon.sdf.org/@netbsd/112446618914747900

Edit: Spelling

Example of (reasonably) good prompt: This code introduces deep recursion and can sometimes cause a "maximum stack size exceeded" error in certain cases. Please help me convert it to use a while loop instead.

That sounds like those cases on YouTube where the correction to the code was shorter than the prompt hahaha

What drives me crazy about its programming responses is how awful the HTML it suggests is. The vast majority of its answers are inaccessible. If anything, an LLM should be able to process and reconcile the correct choices for semantic HTML better than a human... but it doesn't, because it's not trained on WAI-ARIA... it's trained on random Reddit and Stack Overflow results and packages those up in nice-sounding words. And it's not entirely that the training data wants to be inaccessible... a lot of it is just example code without any intent to be accessible anyway. Which is the problem. LLMs don't know what the context is for something presented as a minimal example vs something presented as an ideal solution, at least not without careful training. These generalized models don't spend a lot of time on the tuned training for a particular task because that would counteract the "generalized" capabilities.

Sure, it's annoying if it doesn't give a fully formed solution of some Python or JS or whatever to perform a task. Sometimes it'll go way overboard (it loves to tell you to extend JS object methods with slight tweaks, rather than use built-in methods, for instance, which is a really bad practice but will get the job done).
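As a rough illustration of that last point: the sketch below is a made-up case, not an actual LLM answer. The `unique` helper and the `tags` data are hypothetical. Rather than attaching a new method to `Array.prototype`, which changes every array in the program and can collide with other libraries or future standard methods, a plain function built on the existing built-ins does the same job.

```typescript
// Hypothetical helper: a standalone function that relies only on built-in
// array methods, used instead of adding `unique` to Array.prototype.
function unique<T>(items: readonly T[]): T[] {
  // Keep an element only at the index of its first occurrence.
  return items.filter((item, index) => items.indexOf(item) === index);
}

const tags = ["a", "b", "a", "c", "b"];
const deduped = unique(tags); // ["a", "b", "c"]
```

The function is just as short as the prototype patch would be, and nothing outside the call site is affected.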

We already have a massive issue with inaccessible web sites and this tech is just pushing a bunch of people who may already be unaware of accessible html best practices to write even more inaccessible html, confidently.

But hey, that's what capitalism is good for, right? Making money on half-baked promises and screwing over the disabled. They aren't profitable, anyway.

I just use it to get ideas about how to do something, or ask it to write short functions for stuff I wouldn't know that well. I tried using it to create a graphical UI for a script, but that was a constant struggle to keep it on track. It managed to create something that kind of worked, but it was like trying to hold two repelling magnets together, and I had to constantly reset the conversation after it got "corrupted".

It's a useful tool if you don't rely on it, use it correctly and don't trust it too much.

I guess it depends on the programming language... With Python, I got great results very fast. But Python is all about quick and dirty 😂

People downvote me when I point this out in response to "AI will take our jobs" doomerism.

I mean, AI eventually will take our jobs, and with any luck it'll be a good thing when that happens. Just because ChatGPT v3 (or w/e) isn't up to the task doesn't mean v12 won't be.

Rookie numbers! If we can't get those numbers up to at least 75% by next quarter, then the whippings will occur until misinformation increases!

I always thought of it as a tool to write boilerplate faster, so no surprises for me

They’ve done studies: 48% of the time, it works every time.

Sure does, but even when wrong it still gives a good start, meaning I write less syntax.

Particularly for boring stuff.

Example: My boss is a fan of useMemo in React and isn't bothered about the overhead, so for repetitive stuff like sorting I just write a comment, which is easier to write:

// Sort members by last name ascending

And then press return a few times. Plus, with the integration into Visual Studio Professional, it will learn from your other files, so if you have coding standards it’s great for that.
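For anyone wondering what that comment might expand to, here is a minimal sketch. It is a guess at the sort of boilerplate meant, not the commenter's actual code or a real Copilot completion. The `Member` shape and the function name are assumptions, and React is left out so the snippet stands on its own.

```typescript
// Hypothetical shape; the thread does not show the real data model.
interface Member {
  firstName: string;
  lastName: string;
}

// Sort members by last name ascending
function sortMembersByLastName(members: readonly Member[]): Member[] {
  // Copy first so the caller's array (e.g. React props or state) is not mutated.
  return members.slice().sort((a, b) => a.lastName.localeCompare(b.lastName));
}
```

Inside a component this would typically be wrapped as `useMemo(() => sortMembersByLastName(members), [members])`, so the sort only reruns when `members` changes.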

Is it perfect? No. Does it save time and allow us to actually solve complex problems? Yes.