this post was submitted on 27 Aug 2023

1022 points (97.1% liked)

Memes



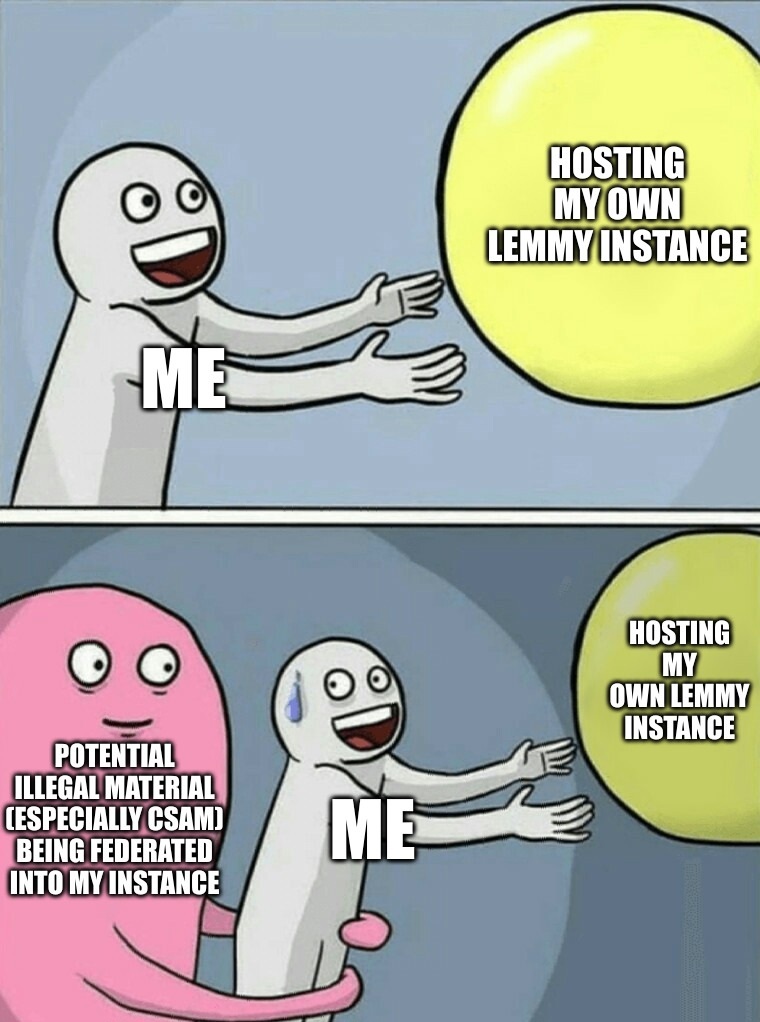

This is the biggest design flaw of Lemmy.

Instances should host separate content, and aggregation of separate instances should be up to the client.

Instead we got the worst of all worlds. It means that Lemmy can never truly scale performance-wise or survive legally.

Hopefully they solve it in some way, but I don't see how unless they do the above and totally remove cross-instance caching.

Yeah, but then you'll have a problem with instances being overloaded by requests.

Example:

A post is located at Instance A, which has 100 users and can handle up to 150 connections at a time

Instance B has 100 users

Instance C has 100 users

Assume all these users are in the same timezone and have the same one hour of free time after coming home from work

All users at Instances B and C want to see the post at Instance A

200 requests get sent to Instance A

Instance A also receives 100 requests from its own users

Instance A receives a total of 300 requests at around the same time. Instance A is overloaded.

Each of Instances A, B, and C would need capacity for at least 300 connections in order for this to work, since any of them could be the one hosting the popular post.

Imagine there being 10 instances. All of them would need capacity for at least 1000 connections. Each one of them.
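To make the arithmetic concrete, here is a rough Python sketch of the no-caching case. It assumes the same things as the example (every instance has the same number of users, and every user requests the post once at about the same time); the function name and parameters are made up for illustration.

```python
def direct_requests(num_instances: int, users_per_instance: int) -> int:
    """Requests reaching the hosting instance when every user fetches from it directly."""
    return num_instances * users_per_instance


CAPACITY = 150  # connections Instance A can handle, taken from the example

for n in (3, 10):
    load = direct_requests(n, 100)
    print(f"{n} instances: {load} requests, overloaded: {load > CAPACITY}")
```

The load on the hosting instance grows with the total number of users in the whole network, not with its own user count.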

Instances need to cache other instances to reduce redundant requests. This way the requests are reduced to 1 per remote instance. Only one copy needs to be sent to each other instance, instead of being sent to each individual user of those instances.

All 100 users at an instance could just use that 1 copy at their instance.

So now in this scenario:

Instance A has a post everyone wants to see. Instance A gets 100 requests from its own users + 1 from each other instance. Even with 10 total instances (including itself), there'd be only 109 requests, a far more manageable number than 1000.
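The same scenario can be played out as a toy simulation. This is only a sketch of the fetch-once-and-reuse idea, not a description of Lemmy's actual federation code; the class and function names are hypothetical, and it ignores things like cache expiry or updates to the post.

```python
class Origin:
    """The instance hosting the post; counts every request it serves."""

    def __init__(self):
        self.requests_served = 0

    def get_post(self):
        self.requests_served += 1
        return "post content"


class CachingInstance:
    """A remote instance that fetches the post once and reuses its local copy."""

    def __init__(self, origin):
        self.origin = origin
        self.cached_post = None

    def get_post(self):
        if self.cached_post is None:
            # First local user to ask triggers the single request to the origin.
            self.cached_post = self.origin.get_post()
        return self.cached_post


def simulate(num_instances, users_per_instance):
    origin = Origin()
    # The hosting instance's own users hit it directly.
    for _ in range(users_per_instance):
        origin.get_post()
    # Users on every other instance go through their local cached copy.
    for _ in range(num_instances - 1):
        remote = CachingInstance(origin)
        for _ in range(users_per_instance):
            remote.get_post()
    return origin.requests_served


print(simulate(10, 100))  # 109
```

With caching, adding another instance costs the hosting instance one extra request instead of 100.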