this post was submitted on 02 Oct 2024

171 points (95.2% liked)

Technology


you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

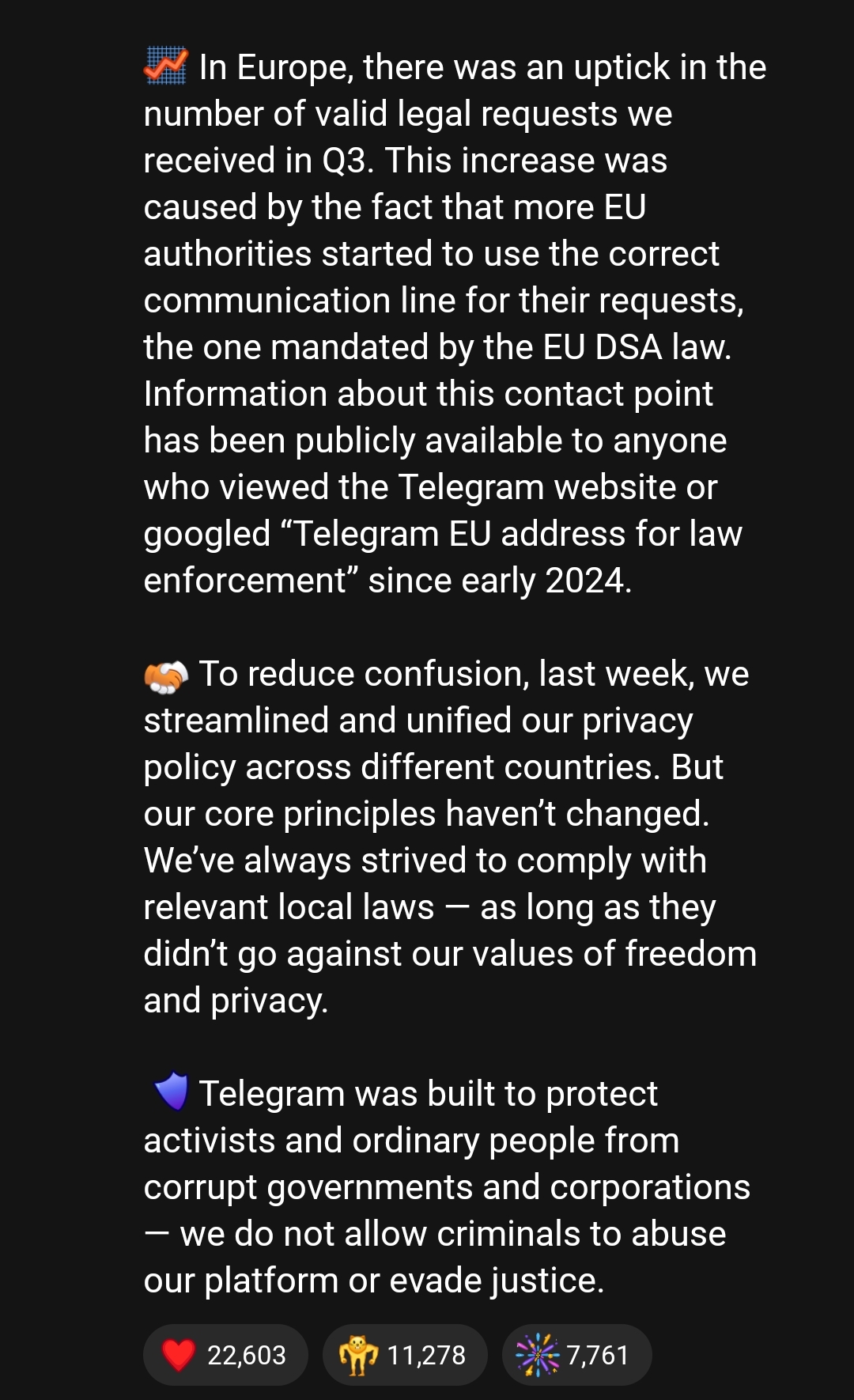

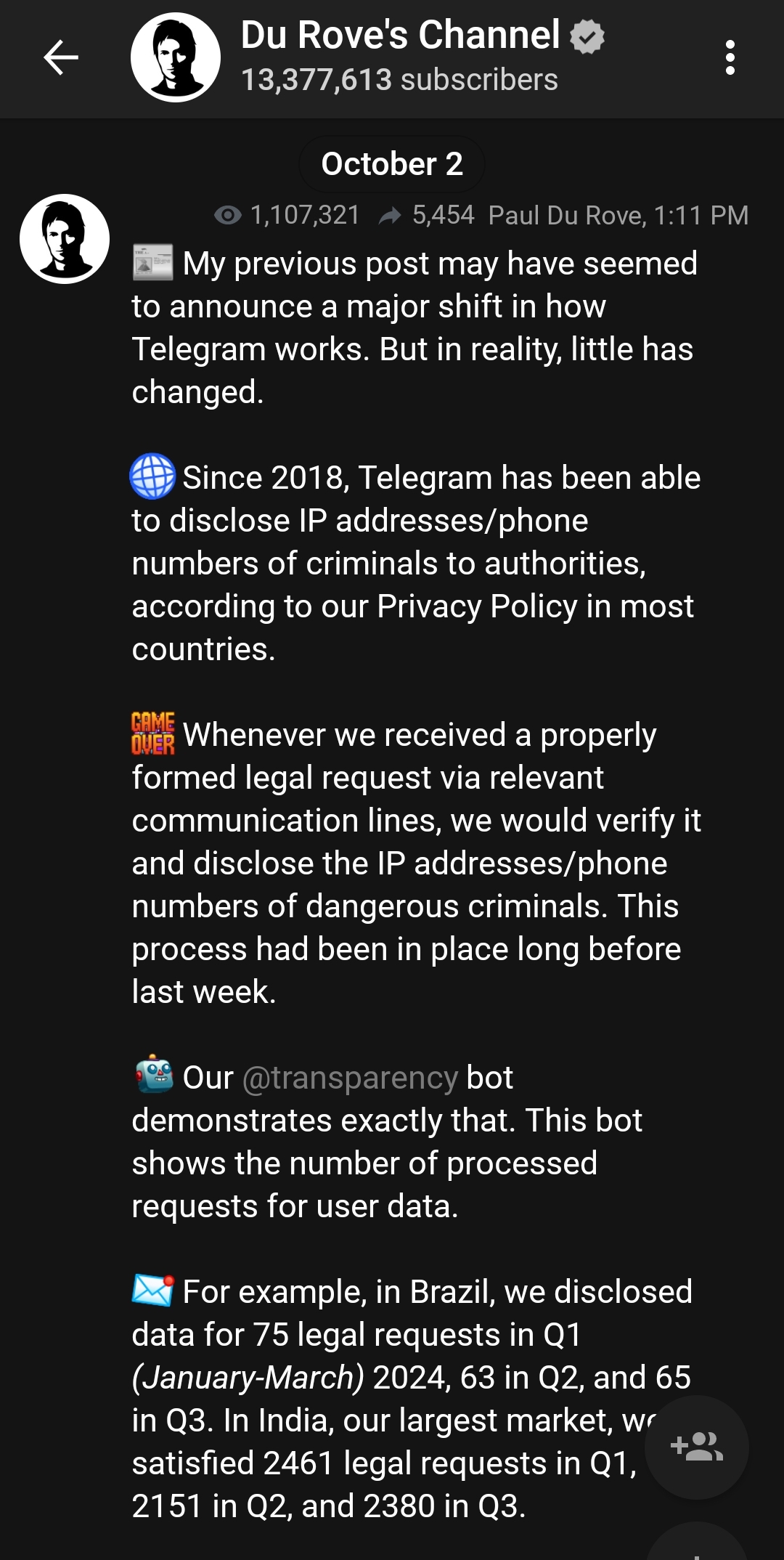

So who gets to pick what's a lawful request and criminal activity? It's criminal in some states to seek an abortion or help with an abortion, so would they hand out the IPs of those "criminals"? Because depending on who you ask some will tell you they're basically murderers. And that's just one example.

Good privacy apps have nothing to hand out to any government, like Signal.

Exactly. Striving for zero knowledge is the right way to go.

But then you can't sell your customers' data for profit. Even if you don't now, you still have that option in the future.

Exactly. Which is the entire reason you should do it. Since you can't sell your customers for profit, that means you have to profit off of your customers. And another business could start up and compete with you. Also, your customers will trust you more.

The second I went to sign up and learned a phone number was absolutely required, I knew that their privacy was pure bullshit. That little declaration at the end here is an absolute slap in the face.

Signal requires that as well. Their privacy is definitely not bullshit. As far as I can tell, it's a spam mitigation method. But yeah, Telegram is pretty much the very bottom of privacy. Even Meta now encrypts all messages across all platforms.

It's bad for privacy no matter how you sell it. Unless you have a good amount of disposable income to buy up burner numbers all the time, a phone number tends to be incredibly identifying. So if a government agency comes along saying "Hey, we know this account sent this message and you have to give us everything you have about this account," for the average person, it doesn't end up being that different from having given them your full ID.

Another aspect is the social graph. It's targeted at normies, so they can switch to it easily.

https://signal.org/blog/private-contact-discovery/

By using phone numbers, you can message your friends without needing to have them all register usernames and tell them to you. It also means Signal doesn't need to keep a copy of your contact list on their servers; everyone keeps their own local contact list.

This means private messaging for loads of people, which is their goal.

It's a bit backwards. Since your account is your phone number, the agency would be asking "give us everything you have from this number". They've already IDed you at that point.

Yep, at that point they're just fishing for more which, hey, why wouldn't they.

It's a give and take for sure. Requiring a real phone number makes it harder for automated spam bots to use the service, but at the same time, it puts the weight of true privacy on the shoulders and wallets of the users and, in a lesser way, incentivizes the use of less than reputable services, should a user want to truly keep their activities private.

And yeah, there's an argument to be made for keeping crime at bay, but that also comes with risks itself. If there was some way to keep truly egregious use at bay while not risking a $10,000 fine on someone for downloading an episode of Ms. Marvel, I think that would be great.

I mean it's not ideal but as long as it's not tied to literally any other information, the way Signal does it, it's "fine", and certainly not "bad" and especially not "pure bullshit".

They have done this several times. They give them nothing because they have nothing.

Says right there in the subpoena "You are required to provide all information tied to the following phone numbers." This means that the phone number requirement has already created a leak of private information in this instance; Signal simply couldn't add more to it.

Additionally, that was posted in 2021. Since then, Signal has introduced usernames to "keep your phone number private." Good for your average Joe Blow, but should another subpoena be submitted, now stating "You are required to provide all information tied to the following usernames," this time they will have something to give, being the user's phone number, which can then be used to tie any use of Signal they already have proof of back to the individual.

Yeah, it's great that they don't log what you send, but that doesn't help if they get proof in any other way. The fact is, because of the phone number requirement, anything you ever send on Signal can easily be tied back to you should it get out, and that subpoena alone is proof that it does.

What information? The gov already had the phone number. They needed it to make the request.

Here's a more recent one. Matter of fact, here's a full list of all of them. Notice the lack of any usernames provided.

Also note that a bunch of the numbers they requested weren't even registered with Signal, so the gov didn't even know if they were using the app and were just throwing shit at the wall and seeing what sticks.

They can't respond to requests for usernames because they don't know any of them. From Signal: "Once again, Signal doesn’t have access to your messages; your calls; your chat list; your files and attachments; your stories; your groups; your contacts; your stickers; your profile name or avatar; your reactions; or even the animated GIFs you search for – and it’s impossible to turn over any data that we never had access to in the first place."

What else ya got?

If they're getting evidence outside of Signal, that's outside the scope of this discussion.

...no. It can't.

It's proof that it doesn't.

Guys like you see privacy as a monolith, which it never is. Unusable privacy is meaningless, as email has shown. Privacy of communications does not mean privacy of communicators, and usable authentication can be more important than anonymity.

And all this has to be realised on real-world servers that are always within reach of real-world governments.

In the US, agents must petition a judge for a search warrant. If it is granted, the agent may then compel an IT company to produce the requested data. If the company is able to, it must comply. It isn't up to the CEO to decide what he feels is right.

Look for services that allow your data to be encrypted and that clearly state the service provider does not have the encryption keys; you do. Apple does this, I believe.

Probably Telegram themselves. Durov was forced into exile by Putin.

The...law?

Of course they will. If they don't, they'll be arrested. Which is exactly what happened.

In which country?

The country in which the perpetrator lives or the crime was committed. First time using the internet?

In your opinion, all companies must disclose the personal information of customers whenever a Government says "This person broke the law"?

None of this is my opinion, it's just how the world works LOL

Not necessarily, but kinda. The gov typically needs some sort of warrant, and it needs approval from the country it's requesting the data from. (I don't know all the legal terms here.) The provider can contest it. Look at the disclosures of your favorite international tech company; most of them make this information public (except when the gov specifically tells them they can't until it changes its mind later).

Here's one from Proton

Can you elaborate?

Which Government?

Pardon my ignorance as this is my first time using the internet, but I am pretty sure that every Government on the planet does not use a universal set of laws or procedures for enforcement.

I just did.

I already answered this one as well.

No but they all certainly have some sort of system for requesting access to information.

So in your world, journalists and activists who try to draw attention to the human rights violations their country's fascist government is committing, in an attempt to bring about good change, should just be fucked over, right?

Because those governments label those people as "criminals" when they're objectively not.

I'll refer you to my previous comment:

Notice at no time did I use the words "should" or "should not". We're just discussing facts here.

I love how you get downvoted by people who live in some sort of fictitious world. Kind of like the sovereign citizen nonsense.

This may be of some use to you.

https://www.merriam-webster.com/dictionary/elaborate

United States of America? Canada? North Korea? China? Australia? Saudi Arabia? South Africa? Brazil?

The point is the app was designed for secure communication, specifically from corrupt governments, which is why it is problematic to allow access to user data whenever the individual is breaking a law in that country.

Or to use the example from the top:

Can you elaborate on what you're asking me to elaborate on, because I honestly don't know beyond what I've already told you.

Yes. Any of these could potentially be "the country they're requesting it from".

If you think that's true, you are sorely mistaken. It may be how it is advertised, but it is not how it was designed. If it were designed that way, as many many different chat apps are, they would have no information to give up to a subpoena. AKA the "zero knowledge" encryption that was mentioned previously.

I agree. For the third time, this is not my opinion, this is just how the world works.

Or to use my answer from the top: