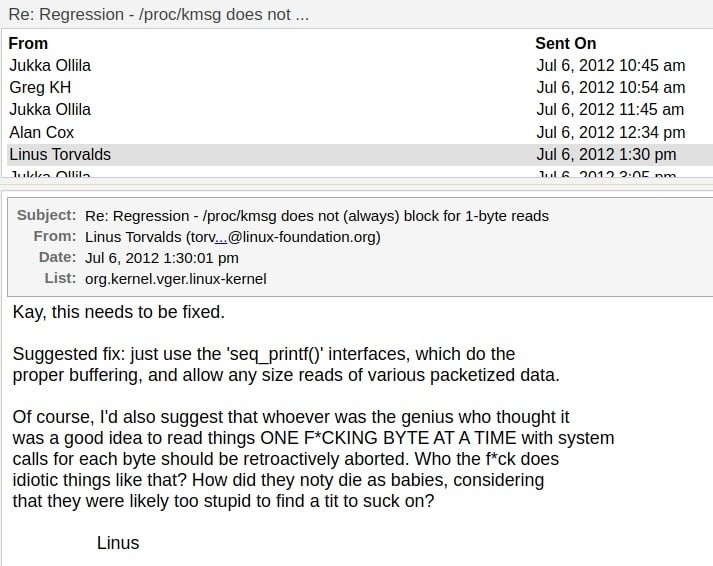

I am thinking of deploying a RAG system to ingest all of Linus's emails, commit messages and pull request comments, and we will have a Linus chatbot.

Hold on there Satan... let's be reasonable here.

The only times I've seen AI work well are for things like game development, mainly upscaling textures and filling in missing frames in older games so they can run at higher frame rates without being choppy. It may even have applications for getting more voice acting done... if SAG and Silicon Valley can find an arrangement that works out well for both parties.

If not for that I'd say 10% reality was being.... incredibly favorable to the tech bros

^^

^^^

I play around with the paid version of chatgpt and I still don't have any practical use for it. it's just a toy at this point.

I use shell_gpt with an OpenAI API key so that I don't have to pay a monthly fee for their web interface, which is way too expensive. I topped up my account with $5 back in March and I still haven't used it up. It is OK for getting info about very well established topics where doing a web search would be more exhausting than asking ChatGPT. But every time I try something more esoteric it will make up shit, like non-existent options for CLI tools.

ugh hallucinating commands is such a pain

I used ChatGPT to look up some syntax for a niche scripting language over the weekend, hoping to cut down the time I spent working so I could get back to the weekend.

Then, yesterday, I spent time talking to a colleague who was familiar with the language to find the real syntax because chatGPT just made shit up and doesn't seem to have been accurate about any of the details I asked about.

Though it did help me realize that this whole time when I thought I was frying things, I was often actually steaming them, so I guess it balances out a bit?

It's useful for my firmware development, but it's a tool like any other. Pros and cons.

he isn't wrong

If anything he's being a bit generous.

Like with any new technology. Remember the blockchain hype a few years back? Give it a few years and we will have a handful of areas where it makes sense and the rest of the hype will die off.

Everyone sane probably realizes this. No one knows for sure exactly where it will succeed so a lot of money and time is being spent on a 10% chance for a huge payout in case they guessed right.

There's an area where blockchain makes sense!?!

It has some application in technical writing, data transformation and querying/summarization but it is definitely being oversold.

Yep, I know AI should die someday.

Linus is known for his generosity.

Linus is a generous man.

Dude...

What?

True. 10% is very generous.

Copilot by Microsoft is completely and utterly shit but they're already putting it into new PCs. Why?

Investors are saying they'll back out if there's no AI in products. So tech leaders will talk the talk and all deal with AI.

Copilot + Pcs tho...

I think when the hype dies down in a few years, we'll settle into a couple of useful applications for ML/AI, and a lot will be just thrown out.

I have no idea what will be kept and what will be tossed but I'm betting there will be more tossed than kept.

AI is very useful in the medical sector, if coupled with human intervention. The very tedious work radiologists do to rule out normal imaging and its variants (which account for over 80% of cases) can be automated with AI. Many common presenting symptoms can be guided towards a diagnosis with some meticulous use of AI tools. Some BCIs, such as bioprostheses, can also benefit immensely from AI.

The key is that its work must be monitored by clinicians. As valuable as patients' private information is, blindly feeding everything to an AI can have disastrous consequences.

That's about right. I've been using LLMs to automate a lot of cruft work from my dev job daily. It's like having a knowledgeable intern who sometimes impresses you with their knowledge but needs a lot of guidance.

watch out; i learned the hard way in an interview that i do this so much that i can no longer create terraform & ansible playbooks from scratch.

even a basic api call from scratch was difficult to remember and i'm sure i looked like a hack to them since they treated me as such.

I mean, interviews have always been hell for me (often with multiple rounds of leetcode) so there's nothing new there for me lol

Same here, but this one was especially painful since it was the closest match with my experience I've ever encountered in 20ish years. Now I know that they will never give me the time of day again and, based on my experience in Silicon Valley, I may end up on their blacklist permanently.

Blacklists are heavily overrated and exaggerated, I'd say there's no chance you're on a blacklist. Hell, if you interview with them 3 years later, it's entirely possible they have no clue who you are and end up hiring you - I've had literally that exact scenario happen. Tons of companies allow you to re-apply within 6 months of interviewing, let alone 12 months or longer.

The only way you'd end up on a blacklist is if you accidentally step on the owner's dog during the interview or something like that.

Being on the other side of the interviewing table for the last 20ish years, and being told more times than I want to remember that we're not going to hire people that everyone unanimously loved and we unquestionably needed, makes me think that blacklists are common.

In all of the cases I've experienced in the last decade or so, people who had FAANG and old silicon on their resumes but couldn't do basic things like creating an Ansible playbook from scratch were either an automatic addition to that list or at least the butt of a joke that pervades the company's Kool-Aid drinker culture for years afterwards, especially so in recruiting.

Yes they'll eventually forget and I think it's proportional to how egregious or how close to home your perceived misrepresentation is to them.

I think I've probably only ever been blacklisted once in my entire career, and it's because I looked up the reviews of a company and they had some very concerning stuff so I just ghosted them completely and never answered their calls after we had already begun to play a bit of phone tag prior to that.

In my defense, they took a good while to reply to my application and they never sent any emails just phone calls, which it's like, come on I'm a developer you know I don't want to sit on the phone all day like I'm a sales person or something, send an email to schedule an interview like every other company instead of just spamming phone calls lol

Agreed though, eventually they will forget, it just needs enough time, and maybe you'd not even want to work there.

In addition, there have been these studies released (not so sure how well established, so take this with a grain of salt) lately, indicating a correlation with increased perceived efficiency/productivity, but also a strongly linked decrease in actual efficiency/productivity, when using LLMs for dev work.

After some initial excitement, I’ve dialed back using them to zero, and my contributions have been on the increase. I think it just feels good to spitball, which translates to a heightened sense of excitement while working. But it’s really just much faster and more convenient to do the boring stuff with snippets and templates etc., if not as exciting. We’ve been doing pair programming lately with humans, and while that’s slower and less efficient too, it seems to contribute towards a rise in quality and fewer problems in code review later, while also providing the spitballing side. In a much better format, I think, too, though I guess that’s subjective.

Decided to say something popular after his snafu, I see.

Ai bad gets them every time.

I make DNNs (deep neural networks), the current trend in artificial intelligence modeling, for a living.

Much of my ancillary work consists of tempering the C-suite's hype and expectations of what "AI" solutions can solve or completely automate.

DNN algorithms can be powerful tools and muses in scientific endeavors, engineering, creativity and innovation. They aren't full replacements for the power of the human mind.

I can safely say that many, if not most, of my peers in DNN programming and data science are humble in our approach to developing these systems for deployment.

If anything, studying this field has given me an even more profound respect for the billions of years of evolution required to display the power and subtleties of intelligence as we narrowly understand it in an anthropological, neuro-scientific, and/or historical framework(s).

As a fervent AI enthusiast, I disagree.

...I'd say it's 97% hype and marketing.

It's crazy how much FUD is flying around, and it legitimately buries good open research. It's also crazy that these giant corporations are explicitly saying what they're going to do, and that anyone buys it. TSMC allegedly calling Sam Altman a 'podcast bro' is spot on, and I'd add "manipulative vampire" to that.

Talk to any long-time resident of localllama and similar "local" AI communities who actually dig into this stuff, and you'll find immense skepticism, not the crypto-like AI bros you find on LinkedIn, Twitter and such who blot everything out.