this post was submitted on 05 Feb 2024

Memes

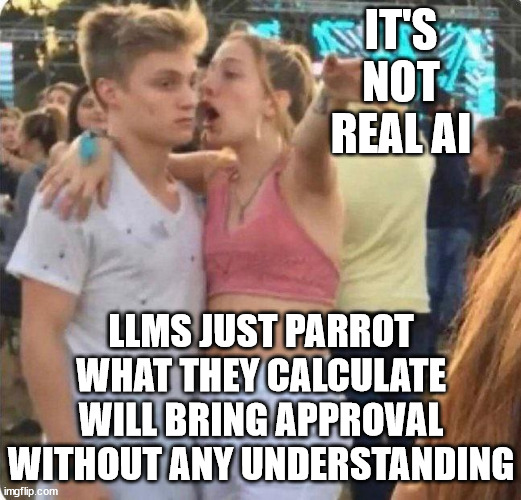

Ok, but so do most humans? Very few people have a true understanding of the topics they talk about. They parrot the parroting that they have been told throughout their lives. This only gets worse as you move into more technical topics. Ask someone why it is cold in winter and you will be lucky if they say it is because the days are shorter than in summer. That is the most rudimentary "correct" way to answer that question, and it is still an incorrect parroting of something they have been told.

Ask yourself, what do you actually understand? On how many topics could you be asked "why?" repeatedly and still be able to answer more than 4 or 5 times? I know I have a few. I also know which topics I am not able to do that with.

I don't think actual parroting is the problem. The problem is they don't understand a word outside of how it is organized. They can't be told to do simple logic because they don't have a simple understanding of each word in their vocabulary. They can only reorganize things to varying degrees.

https://en.m.wikipedia.org/wiki/Chinese_room

I think they're wrong, as it happens, but that's the argument.

I guess I am just looking at it from an end user vantage point. I'm not saying the model can't understand the words it's using. I just don't think it currently understands that specific words refer to real-life objects, and that there are laws of physics that apply to those specific objects and how they interact with each other.

Like, saying there is a guy who exists and is a historical figure means that information is independently verified by physical objects that exist in the world.

In some ways, you are correct. It is coming though. The psychological/neurological word you are searching for is "conceptualization". The AI models lack the ability to turn the text they know into abstract ideas of the objects, at least in the same way humans do. Technically, the ability to say "show me a chair" and have it return images of a chair, then follow up with "show me things related to the last thing you showed me" and have it show couches, butts, tables, etc. is a conceptual abstraction of a sort. The issue comes when you ask "why are those things related to the first thing?" It is coming, though it will be a little while before a model can describe the abstraction it just performed. It is capable of the first stage at least.