You're referring to using only fedi-safety instead of pictrs-safety, as was mentioned in §"For other fediverse software admins", here, right?

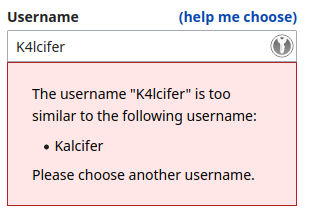

Kalcifer

One thing you’ll learn quickly is that Lemmy is version 0 for a reason.

Fair warning 😆

One problem with a big list is that different instances have different ideas over what is acceptable.

Yeah, that would be where being able to choose from any number of lists, or to freely create one comes in handy.

create from it each day or so to run on the images since it was last destroyed.

Unfortunately, for this use case, the GPU needs to be accessible in real time; there is a 10-second window when an image is posted for it to be processed [1].

References

- "I just developed and deployed the first real-time protection for lemmy against CSAM!". @[email protected]. [email protected]. Divisions by zero. Published: 2023-09-20T08:38:09Z. Accessed: 2024-11-12T01:28Z. https://lemmy.dbzer0.com/post/4500908.

- §"For lemmy admins:"

[...]

- fedi-safety must run on a system with GPU. The reason for this is that lemmy provides just a 10-seconds grace period for each upload before it times out the upload regardless of the results. [1]

[...]


Probably the best option would be to have a snapshot

Could you point me towards some documentation so that I can look into exactly what you mean by this? I'm not sure I understand the exact procedure that you are describing.

[...] if you’re going to run an instance and aren’t already on Matrix, make an account. It’s how instance admins tend to keep in contact with each other.

This is good advice.

Fediseer provides a web of trust. An instance receives a guarantee from another instance. That instance then guarantees another instance. It creates a web of trust starting from some known good instances. Then if you wish you can choose to have your lemmy instance only federate with instances that have been guaranteed by another instance. Spam instances can’t guarantee each other, because they need an instance that is already part of the web to guarantee them, and instances won’t do that because they risk their own place in the web if they falsely guarantee another instance (say, if one instance keeps guaranteeing new instances that turn out to be spam, they will quickly lose their own guarantee).
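
To make the chain-of-guarantees idea concrete, here is a minimal sketch in Python. It is not Fediseer's API or its actual implementation; the function name, the `guarantees` mapping, and the `*.example` domains are all made up for illustration. It only shows why spam instances that guarantee each other never enter the web: nothing connects them back to a known good instance.

```python
from collections import deque


def guaranteed_instances(roots, guarantees):
    """Return every instance reachable from the known good roots.

    `guarantees` maps a guarantor to the set of instances it guarantees.
    Both the name and the data shape are hypothetical, not Fediseer's.
    """
    trusted = set(roots)
    queue = deque(roots)
    while queue:
        guarantor = queue.popleft()
        for instance in guarantees.get(guarantor, ()):
            if instance not in trusted:
                trusted.add(instance)
                queue.append(instance)
    return trusted


# Hypothetical data: two spam instances guarantee each other,
# but no instance inside the web guarantees either of them.
guarantees = {
    "root.example": {"a.example"},
    "a.example": {"b.example"},
    "spam1.example": {"spam2.example"},
    "spam2.example": {"spam1.example"},
}

trusted = guaranteed_instances(["root.example"], guarantees)
print(sorted(trusted))
```

In this sketch, removing the `root.example` to `a.example` guarantee would also drop `b.example`, since it would no longer be reachable from a known good instance.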

How would one get a new instance approved by Fediseer?

There is a chat room where instance admins share details of spam accounts, and it’s about the best we have for Lemmy at the moment (it works quite well, really, because everyone can be instantly notified but also make their own decisions about who to ban or if something is spam or allowed on their instance - because it’s pretty common that things are not black and white).

Yeah, I think I'm more on the side of this now. The chat is a decent and workable solution. It's definitely a lot more hands-on/manual, but I think it's a solid middle-ground solution for the time being.

If you ban a user [...], then if you are automating this you end up with the issue of if anyone screws up then how do you get someone’s account unbanned on all those instances?

The idea would be that if they are automatically banned, then the removal of the user from the list would then cause them to be automatically unbanned. That being said, you did also state:

If you ban a user and opt to remove all their content (which you should, with spam)

How do you get all their content restored

To which I say that I hadn't considered that the content would be deleted 😜. I was assuming that the user would only be blocked, but their content would still be physically on the server — it would just be effectively invisible.
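
A rough sketch of the automatic ban/unban idea, assuming users are only hidden rather than deleted so that an unban is fully reversible. Nothing here is a real Lemmy API; `reconcile`, the list contents, and the account names are hypothetical. It takes any number of subscribed blocklists and the set of users that were previously banned automatically, and works out who to ban and who to unban.

```python
def reconcile(subscribed_lists, auto_banned):
    """Compare subscribed blocklists against earlier automatic bans.

    Returns (to_ban, to_unban). Only automatic bans are tracked, so a ban
    that an admin applied by hand is never undone by a list update.
    """
    auto_banned = set(auto_banned)
    # A user is wanted-banned if they appear on any subscribed list.
    wanted = set().union(*subscribed_lists)
    to_ban = wanted - auto_banned      # newly listed users
    to_unban = auto_banned - wanted    # users removed from every list
    return to_ban, to_unban


# Hypothetical example data.
list_a = {"spammer@x.example", "bot@y.example"}
list_b = {"bot@y.example"}
previously_auto_banned = {"bot@y.example", "mistake@z.example"}

to_ban, to_unban = reconcile([list_a, list_b], previously_auto_banned)
print(to_ban, to_unban)
```

Here `mistake@z.example` was taken off every list, so it ends up in `to_unban`. If the ban had also purged the user's content, this step could not restore it, which is the objection raised above.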

how do you trust all these people to never, ever, ever get it wrong?

The naively simple idea was that the banned user could open an appeal to get their name removed from the blocklist. Also, keep in mind that the community's trust in the blocklist is predicated on the blocklist being accurate.

The first one is who controls it?

Ideally, nobody. Anyone could make their own blocklist, and one could choose to pull from any of them.

Yeah, that was poor wording on my part. What I meant to say is that there would be unvetted data flowing into my local network and being processed on a local machine. It may be excessive paranoia, but that feels like a privacy risk.