this post was submitted on 01 Sep 2023

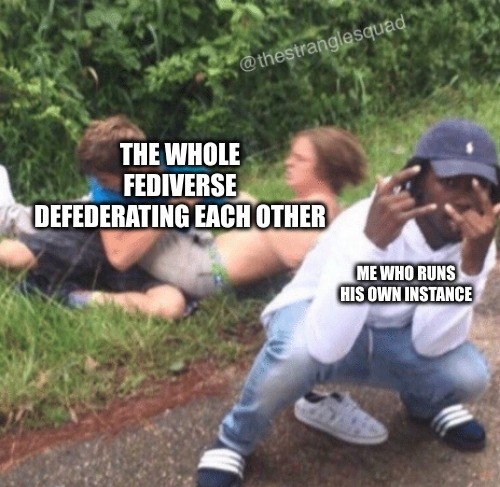

On feddit.de, lemmy.world is only temporarily defederated because of CSAM until a patch is merged into Lemmy that prevents images from being downloaded to your own instance.

So I'll just be patient and wait. It's understandable that the admins don't want to get into trouble with law enforcement.

Won't that lead to some horrible hug-of-death type scenarios if a post from a small instance gets popular on a huge one?

We need more decentralization: a federated image/gif host with CSAM protections.

How would one implement CSAM protection? You'd need actual ML to check for it, and I don't think there are trained models available. And then you'd have to find someone willing to train such a model, somehow. Also, running an ML model would be quite expensive in energy and hardware.

There are models for detecting adult material, idk how well they’d work on CSAM though. Additionally, there is a hash identification system for known images. Idk if it’s available to the public, but I know Apple has it.
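
For anyone wondering what "hash identification for known images" means in practice, here's a rough sketch of the general idea. It is a toy average-hash, not the algorithm any real system uses. The function names, the `max_distance` threshold, and the plain grayscale pixel grid as input are all assumptions made up for illustration. The point is only that an instance could compare an upload's hash against a list of known hashes without ever running an ML model.

```python
from typing import Iterable, Sequence


def average_hash(pixels: Sequence[Sequence[int]]) -> int:
    """Toy perceptual hash (hypothetical helper, not a real system's algorithm).

    Takes a small grayscale grid (rows of 0-255 values) and emits one bit per
    pixel: 1 if the pixel is brighter than the grid's mean, else 0.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions where the two hashes differ."""
    return bin(a ^ b).count("1")


def matches_blocklist(
    image_hash: int,
    blocklist: Iterable[int],
    max_distance: int = 2,  # assumed tolerance; a real threshold needs tuning
) -> bool:
    """True if the hash is within max_distance bits of any known hash."""
    return any(hamming_distance(image_hash, h) <= max_distance for h in blocklist)
```

Because the comparison is by Hamming distance rather than exact equality, small changes like recompression or a slight brightness shift still match, while a genuinely different image does not. A real deployment would also need a trusted source for the hash list, which is the part that isn't generally public.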

Idk, but we gotta figure out something