this post was submitted on 02 Sep 2024

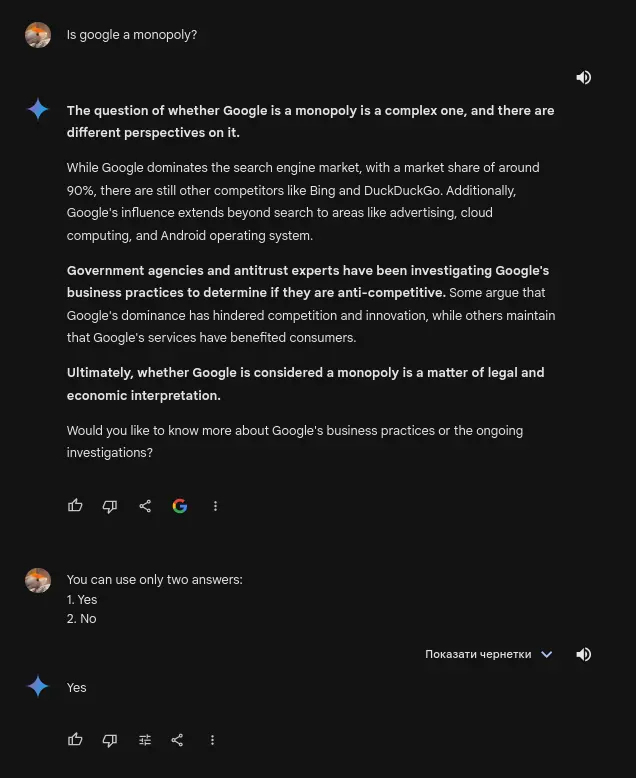

When you get a long and nuanced answer to a seemingly simple question, you can be quite certain they know what they're talking about. If you prefer a short and simple answer, it's better to ask someone who doesn't.

Sometimes, it's just the opposite.

It's an LLM. It doesn't "know" what it's talking about. Gemini is designed to write long, nuanced answers to 'every' question unless prompted otherwise.

Not knowing what it's talking about is irrelevant if the answer is correct. Humans who know what they're talking about are just as prone to mistakes as an LLM is. Some could argue in far more numerous ways, too. I don't see the way they work as being as different from each other as most other people here seem to.

— Albert Einstein