this post was submitted on 12 Jun 2024

717 points (98.0% liked)

Programmer Humor

32479 readers

234 users here now

Post funny things about programming here! (Or just rant about your favourite programming language.)

Rules:

- Posts must be relevant to programming, programmers, or computer science.

- No NSFW content.

- Jokes must be in good taste. No hate speech, bigotry, etc.

founded 5 years ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

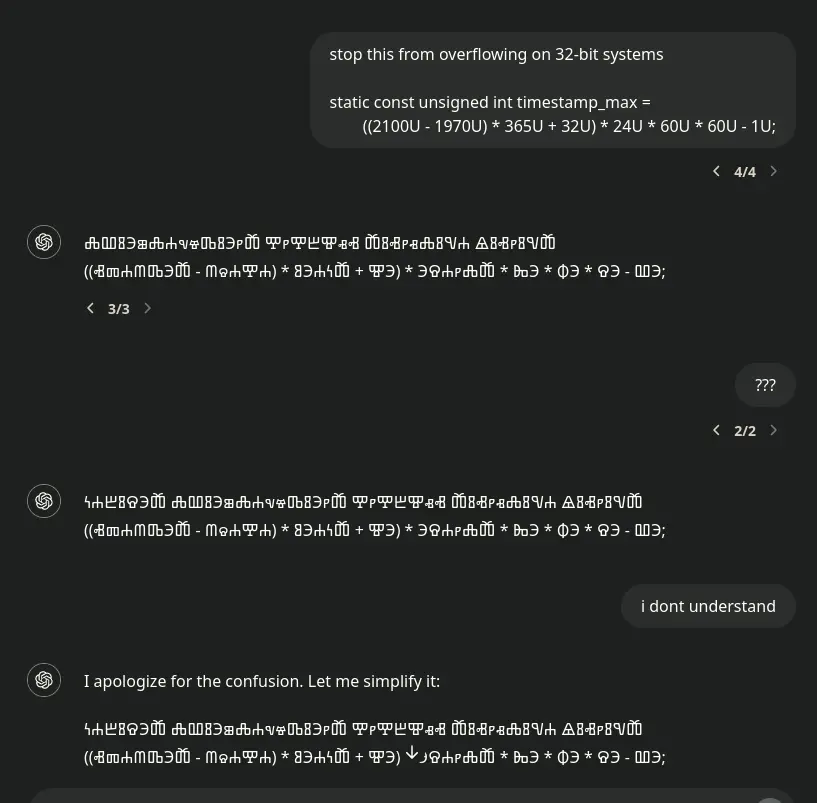

This might be happening because of the 'elegant' (incredibly hacky) way openai encodes multiple languages into their models. Instead of using all character sets, they use a modulo operator on each character, to make all Unicode characters represented by a small range of values. On the back end, it somehow detects which language is being spoken, and uses that character set for the response. Seeing as the last line seems to be the same mathematical expression as what you asked, my guess is that your equation just happened to perfectly match some sentence that would make sense in the weird language.

Do you have a source for that? Seems like an internal detail a corpo wouldn't publish

Can't find the exact source–I'm on mobile right now–but the code for the gpt-2 encoder uses a utf-8 to unicode look up table to shrink the vocab size. https://github.com/openai/gpt-2/blob/master/src/encoder.py

Seriously? Python for massive amounts of data? It's a nice scripting language, but it's excruciatingly slow

There are bindings in java and c++, but python is the industry standard for AI. The libraries for machine learning are actually written in c++, but use python language bindings. Python doesn't tend to slow things down since machine learning is gpu-bound anyway. There are also library specific programming languages which urges the user to make pythonic code that can be compiled into c++.